Kubernetes Decoded: Unlocking the Secrets of Architecture, Components, Installation, and Configuration

Day 1

Challenges and Problems Before Kubernetes

Before Kubernetes, managing containerized applications at scale was a complex and challenging task. Here are some of the problems that developers and operations teams faced before Kubernetes:

Manual Orchestration: In the early days of containerization, teams had to manually orchestrate containerized applications by creating scripts or using configuration management tools. This approach was time-consuming, error-prone, and not scalable.

Vendor Lock-in: Many container orchestration and deployment solutions were tightly coupled to a specific cloud provider or infrastructure, which made it difficult to migrate workloads between environments.

Lack of Portability: Containerized applications often had dependencies on specific versions of software libraries or tools, which made it challenging to ensure consistency across different environments.

Limited Resource Management: Without a centralized system for managing resources, it was difficult to optimize resource usage and ensure that applications had access to the resources they needed.

Inconsistent Deployment: Teams often faced issues with inconsistent deployments, especially when deploying applications across multiple nodes or environments.

Kubernetes addressed these problems by providing a robust, flexible, and portable platform for managing containerized applications. It abstracts away the complexity of managing containers and provides a consistent interface for deploying and managing applications across different environments. It also provides features for resource management, automatic scaling, and self-healing, which make it easier to operate and manage containerized applications at scale.

Why Kubernetes?

Kubernetes has become the de facto standard for container orchestration, and there are several reasons why it has gained widespread adoption in the industry:

Scalability: Kubernetes allows you to easily scale your containerized applications up or down as needed. You can use it to manage applications running on a few containers or thousands of containers spread across multiple nodes.

Resilience: Kubernetes ensures that your applications are highly available and resilient to failures by providing features like automatic failover, self-healing, and rolling updates.

Portability: Kubernetes is designed to be platform-agnostic, which means that you can deploy your applications on any cloud provider, on-premises data center, or hybrid infrastructure.

Efficiency: Kubernetes helps you optimize resource utilization by scheduling containers onto the nodes that have the necessary resources, and by automatically scaling the number of replicas based on the current demand.

Ecosystem: Kubernetes has a large and growing ecosystem of tools and plugins that make it easy to integrate with other tools, automate workflows, and extend the functionality of Kubernetes.

Overall, Kubernetes provides a powerful platform for managing containerized applications at scale, and it has become a critical component of modern application development and deployment.
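The automatic scaling mentioned under Efficiency is usually handled by the HorizontalPodAutoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The minimal Python sketch below implements only that ratio. The function name `desired_replicas` is hypothetical, and the real controller also applies a tolerance, min/max replica bounds, and stabilization rules that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core HPA ratio: ceil(current_replicas * current_metric / target_metric).

    Simplified sketch: ignores the tolerance, min/max bounds and
    stabilization behaviour of the real autoscaler.
    """
    if current_replicas < 0:
        raise ValueError("current_replicas must be non-negative")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


# 4 replicas averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 3 replicas averaging 30% CPU against a 60% target: scale down.
print(desired_replicas(3, 30.0, 60.0))  # 2
```

Rounding up rather than down errs on the side of having enough capacity when demand is between two replica counts.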

Kubernetes Architecture and Components

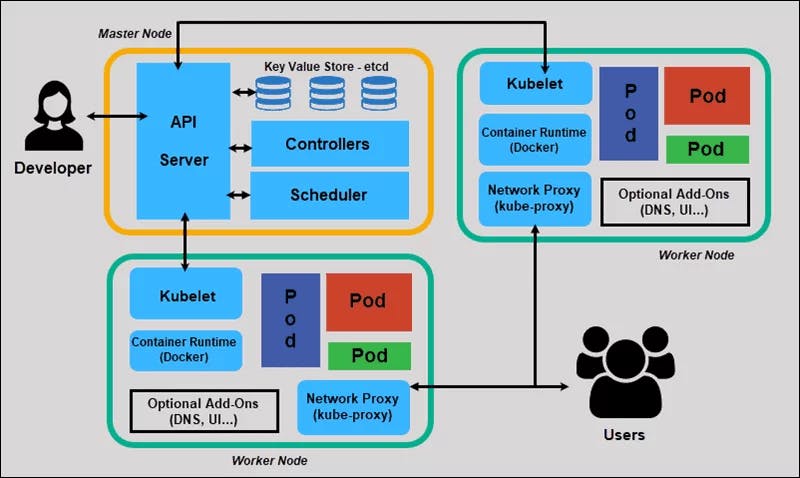

Kubernetes architecture is composed of a set of interacting components that work together to provide a platform for deploying, scaling, and managing containerized applications. Here are the key components of the Kubernetes architecture:

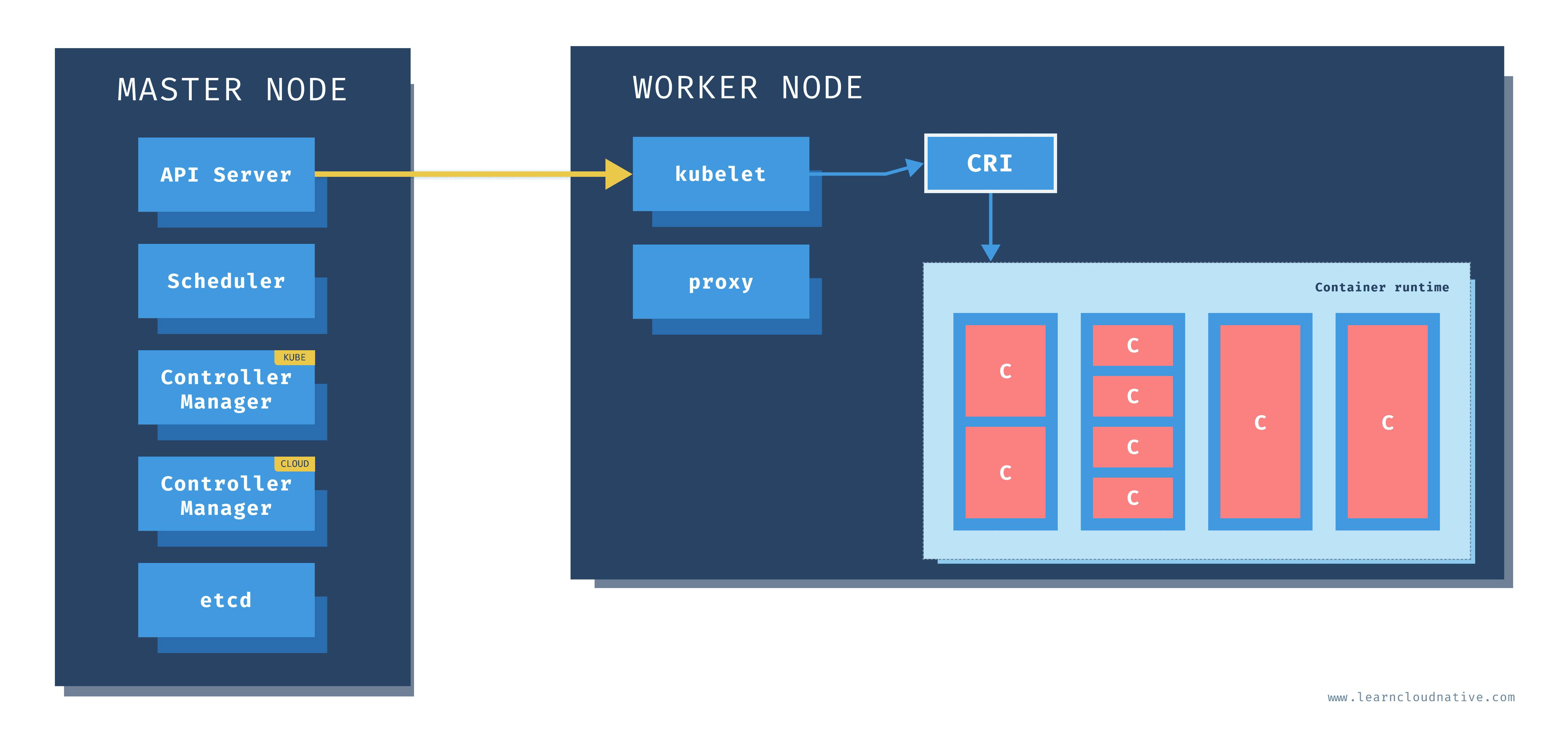

Master components: The master components (called the control plane in current Kubernetes documentation) are responsible for managing the Kubernetes cluster. They include the API server, etcd, kube-scheduler, and kube-controller-manager.

Node components: The node components are responsible for running the containerized applications. The node components include the kubelet, kube-proxy, and container runtime.

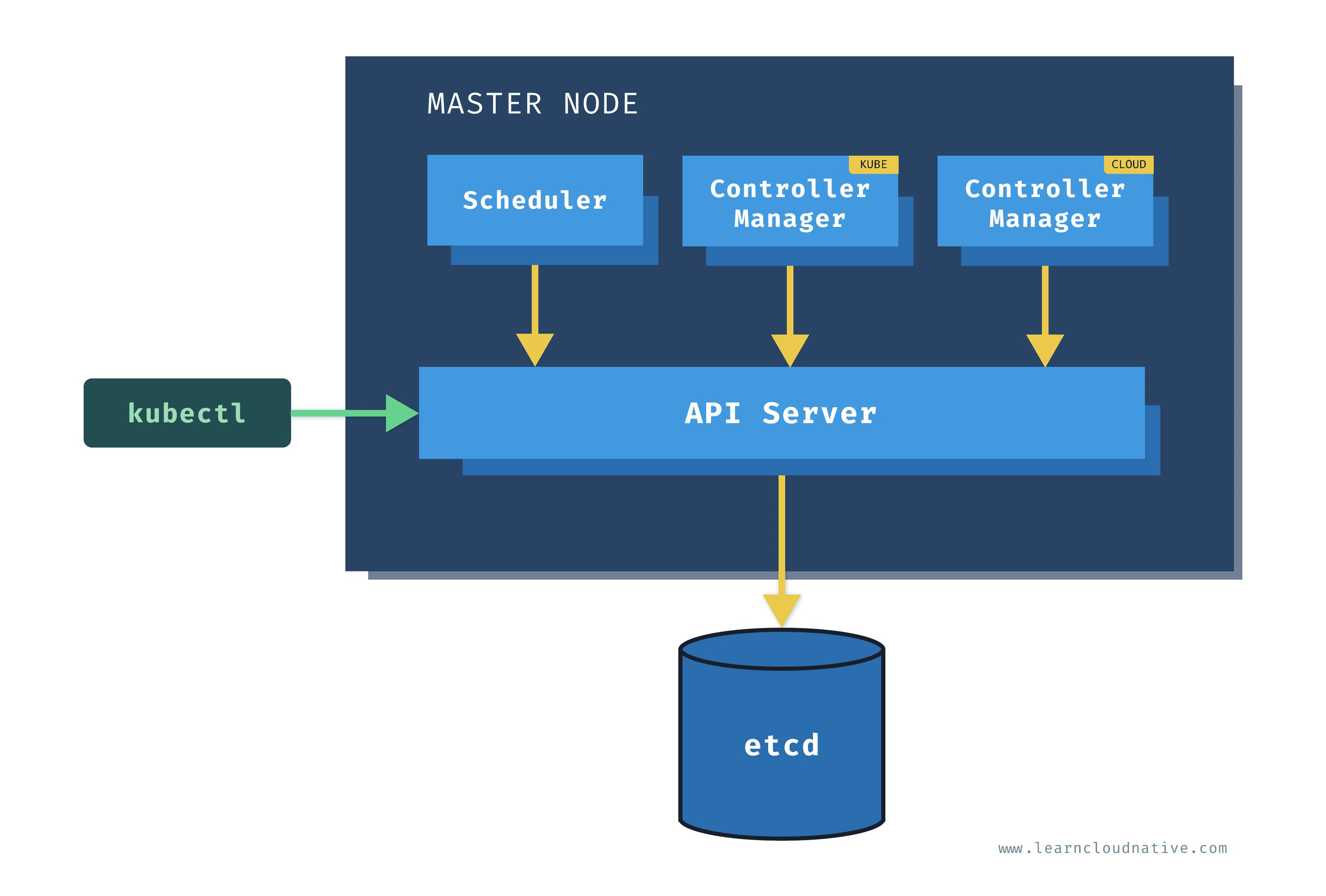

Master components:

API Server: The API server (kube-apiserver) is the central control plane component of Kubernetes. It exposes the Kubernetes API, through which users, tools such as kubectl, and the other cluster components all interact with the cluster. It validates and processes REST requests and persists the resulting object state in etcd, the cluster's distributed database.
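Because the Kubernetes API is a REST API, every object type lives at a predictable URL: the core group (pods, services, namespaces, nodes) sits under `/api/v1`, and every other group under `/apis/<group>/<version>`. The sketch below builds those paths; the helper name `resource_path` is hypothetical and only illustrates the URL layout.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a Kubernetes API REST path.

    The core group (group == "") is served under /api; named groups under /apis.
    Cluster-scoped resources such as nodes are called without a namespace.
    """
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("", "v1", "pods", "default", "nginx"))
print(resource_path("apps", "v1", "deployments", "default"))
print(resource_path("", "v1", "nodes"))
```

For example, `kubectl get pods -n default` ends up as a GET request on `/api/v1/namespaces/default/pods`, and `kubectl get --raw /api/v1/namespaces/default/pods` fetches that path directly.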

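etcd: etcd is a consistent, distributed key-value store that holds all Kubernetes cluster data, including configuration and the current state of every object. It provides a reliable and highly available data store for Kubernetes; in a standard setup only the API server talks to it directly.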
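Two etcd ideas matter most to Kubernetes: every change gets a cluster-wide revision number, and clients can watch a key prefix to be told about changes. With kube-apiserver's default `--etcd-prefix` of `/registry`, a pod is stored under a key such as `/registry/pods/default/nginx`. The toy in-memory store below (`ToyKV` is hypothetical, not etcd's client API) mimics only those two ideas.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

WatchCallback = Callable[[str, str, str, int], None]  # (event, key, value, revision)


@dataclass
class ToyKV:
    """In-memory stand-in for etcd: revisioned keys, prefix reads and prefix watches."""
    data: Dict[str, Tuple[str, int]] = field(default_factory=dict)  # key -> (value, mod revision)
    revision: int = 0
    watchers: List[Tuple[str, WatchCallback]] = field(default_factory=list)

    def put(self, key: str, value: str) -> int:
        self.revision += 1  # every write bumps the global revision
        self.data[key] = (value, self.revision)
        self._notify("PUT", key, value)
        return self.revision

    def delete(self, key: str) -> bool:
        if key not in self.data:
            return False
        self.revision += 1
        del self.data[key]
        self._notify("DELETE", key, "")
        return True

    def get_prefix(self, prefix: str) -> Dict[str, str]:
        return {k: v for k, (v, _) in sorted(self.data.items()) if k.startswith(prefix)}

    def watch(self, prefix: str, callback: WatchCallback) -> None:
        self.watchers.append((prefix, callback))

    def _notify(self, event: str, key: str, value: str) -> None:
        for prefix, callback in self.watchers:
            if key.startswith(prefix):
                callback(event, key, value, self.revision)


store = ToyKV()
events: List[Tuple[str, str, int]] = []
store.watch("/registry/pods/", lambda ev, key, _value, rev: events.append((ev, key, rev)))
store.put("/registry/pods/default/nginx", '{"phase": "Pending"}')  # revision 1
store.put("/registry/namespaces/default", "{}")                   # revision 2, not watched
store.delete("/registry/pods/default/nginx")                      # revision 3
print(events)
```

The watcher sees only the two pod events, each tagged with the revision at which it happened; the API server builds its own watch mechanism for controllers and kubelets on top of this kind of feed.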

Scheduler: The scheduler (kube-scheduler) assigns newly created pods (the smallest deployable units in Kubernetes) that do not yet have a node to a suitable node in the cluster. It considers factors such as resource requests and availability, affinity/anti-affinity rules, and workload balancing when making scheduling decisions.
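kube-scheduler works in two broad phases: filtering removes nodes that cannot run the pod, and scoring ranks the nodes that remain. The sketch below is a toy version that looks only at CPU and memory requests and prefers the node with the most spare capacity left afterwards; `Node`, `PodRequest`, and `schedule` are hypothetical names, and affinity, taints, and the real scoring plugins are not modelled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_millicores >= pod.cpu_millicores
                and n.free_memory_mib >= pod.memory_mib]
    if not feasible:
        return None  # no fit: the real scheduler leaves such a pod Pending
    # Score phase: prefer the node with the most CPU (then memory) left over.
    best = max(feasible, key=lambda n: (n.free_cpu_millicores - pod.cpu_millicores,
                                        n.free_memory_mib - pod.memory_mib))
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 4000, 256)]
print(schedule(PodRequest("web", 250, 512), nodes))     # node-b (node-c lacks memory)
print(schedule(PodRequest("batch", 8000, 512), nodes))  # None
```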

Controller Manager: The controller manager (kube-controller-manager) runs the various controllers that watch the state of the cluster and work to move the current state toward the desired state. Examples include the ReplicaSet controller, which ensures that the specified number of pod replicas is running, and the EndpointSlice controller, which keeps track of the pods that back each Service.
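Every controller follows the same control-loop pattern: observe the current state, compare it with the desired state, and act to close the gap. The sketch below shows one pass of a ReplicaSet-style loop as a pure function returning the actions to take. The function name and pod naming scheme are hypothetical; the real ReplicaSet controller works through the API server and uses its own ranking to decide which pods to delete.

```python
from typing import List, Tuple


def reconcile(desired: int, observed: List[str], prefix: str) -> Tuple[List[str], List[str]]:
    """One pass of a ReplicaSet-style control loop.

    Compares the desired replica count with the pods actually observed and
    returns (pods_to_create, pods_to_delete). It does not change any state;
    a caller applies the actions and the loop simply runs again later.
    """
    missing = desired - len(observed)
    if missing > 0:
        taken = set(observed)
        to_create: List[str] = []
        index = 0
        while len(to_create) < missing:
            candidate = f"{prefix}-{index}"
            if candidate not in taken:
                to_create.append(candidate)
            index += 1
        return to_create, []
    if missing < 0:
        # Too many pods: delete the surplus (here simply the last names in sorted order).
        return [], sorted(observed)[missing:]
    return [], []


# Desired 3, but two pods have died and only web-1 is left.
pods = ["web-1"]
to_create, to_delete = reconcile(3, pods, "web")
pods = sorted((set(pods) | set(to_create)) - set(to_delete))
print(to_create, pods)  # ['web-0', 'web-2'] ['web-0', 'web-1', 'web-2']
```

Because the function only compares and reports, running it again on the repaired state returns no actions, which is what makes the loop safe to repeat forever.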

Node components:

Kubelet: The kubelet is an agent that runs on each node. It watches the API server for pods assigned to its node, asks the container runtime to start their containers, makes sure those containers keep running as expected, and reports pod and node status back to the API server.
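One concrete way the kubelet keeps containers running is its restart logic: when a container exits, it consults the pod's `restartPolicy` (`Always`, `OnFailure`, or `Never`) and restarts with an exponential back-off that the documentation gives as 10s, 20s, 40s, and so on, capped at five minutes. The sketch below encodes those documented defaults; the function names are hypothetical, and the real kubelet also resets the back-off after a container has run cleanly for a while.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Mirror the documented meaning of a Pod's restartPolicy."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy!r}")


def backoff_seconds(restart_count: int, initial: int = 10, cap: int = 300) -> int:
    """Delay before the restart with 0-based index restart_count: doubles each time, capped."""
    return min(initial * 2 ** restart_count, cap)


print(should_restart("OnFailure", 0))              # False: clean exit, leave it stopped
print([backoff_seconds(n) for n in range(7)])      # [10, 20, 40, 80, 160, 300, 300]
```

The growing delay is what shows up as `CrashLoopBackOff` when a container keeps failing.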

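Container Runtime: The container runtime is responsible for actually running the containers on the node. The kubelet talks to it through the Container Runtime Interface (CRI), so any CRI-compatible runtime can be used; containerd and CRI-O are the most common. Kubernetes supported Docker Engine directly through the built-in dockershim until v1.24, which removed it; Docker Engine can still be used through the separate cri-dockerd adapter, and images built with Docker run on any of these runtimes.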
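The point of a runtime interface like the CRI is that the kubelet's logic stays the same whichever runtime sits underneath. The real CRI is a gRPC API with its own services and messages; the Python `Protocol` below is a hypothetical, heavily simplified stand-in, and `FakeRuntime` only imitates the `containerd://` or `cri-o://` prefix that appears in a pod's reported container IDs.

```python
from typing import Dict, List, Protocol


class ContainerRuntime(Protocol):
    """Hypothetical, heavily simplified stand-in for the CRI."""

    def run_container(self, name: str, image: str) -> str: ...

    def stop_container(self, container_id: str) -> None: ...


class FakeRuntime:
    """Pretends to be a CRI runtime; `scheme` mimics the prefix of a containerID."""

    def __init__(self, scheme: str) -> None:
        self.scheme = scheme
        self.running: Dict[str, str] = {}  # container ID -> image

    def run_container(self, name: str, image: str) -> str:
        container_id = f"{self.scheme}://{name}"
        self.running[container_id] = image
        return container_id

    def stop_container(self, container_id: str) -> None:
        self.running.pop(container_id, None)


def start_pod(runtime: ContainerRuntime, containers: Dict[str, str]) -> List[str]:
    """Kubelet-side logic: identical no matter which runtime is plugged in."""
    return [runtime.run_container(name, image) for name, image in containers.items()]


spec = {"web": "nginx:1.25", "sidecar": "busybox:1.36"}
for runtime in (FakeRuntime("containerd"), FakeRuntime("cri-o")):
    print(start_pod(runtime, spec))
```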

kube-proxy: kube-proxy implements the Kubernetes Service abstraction by maintaining network rules (for example, iptables or IPVS rules) on each node. These rules forward traffic sent to a Service's virtual IP address and port to one of the pods backing that Service.
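Conceptually, kube-proxy maintains a table from each Service's virtual IP and port to the pod endpoints behind it, and rewrites it whenever Services or their endpoints change. The toy class below models that table in Python. `ToyServiceProxy` and the IP addresses are hypothetical; it picks backends round-robin for a deterministic demo, whereas in iptables mode backends are chosen at random and the actual forwarding is done by the kernel, not by kube-proxy itself.

```python
import itertools
from typing import Dict, Iterator, List, Tuple

Endpoint = Tuple[str, int]  # (pod IP, target port)


class ToyServiceProxy:
    """Maps a Service's virtual IP:port to its backing pod endpoints."""

    def __init__(self) -> None:
        self._rules: Dict[Endpoint, Iterator[Endpoint]] = {}

    def sync_service(self, cluster_ip: str, port: int, endpoints: List[Endpoint]) -> None:
        """(Re)install the rule for one Service whenever its endpoints change."""
        if endpoints:
            self._rules[(cluster_ip, port)] = itertools.cycle(list(endpoints))
        else:
            self._rules.pop((cluster_ip, port), None)

    def route(self, dest_ip: str, dest_port: int) -> Endpoint:
        """Pick a backend for traffic addressed to the Service (round-robin here)."""
        rule = self._rules.get((dest_ip, dest_port))
        if rule is None:
            raise ConnectionRefusedError(f"no endpoints for {dest_ip}:{dest_port}")
        return next(rule)


proxy = ToyServiceProxy()
proxy.sync_service("10.96.0.20", 80, [("10.244.1.5", 8080), ("10.244.2.7", 8080)])
print([proxy.route("10.96.0.20", 80) for _ in range(3)])
```

Clients only ever see the stable Service address; which pod answers is decided per connection by the installed rules.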

Pod: The pod is the smallest and simplest unit in the Kubernetes deployment model. Although listed here, it is not a node component itself but the unit of work that the node components run. A pod is a logical host for one or more containers that share the same network namespace and can share storage volumes, and it represents a single instance of a running process in the cluster.
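A pod is normally described in a manifest. Kubernetes accepts manifests in JSON as well as YAML, so the sketch below builds one as a Python dictionary and prints it as JSON. The pod name, images, and paths are hypothetical; the structure (`apiVersion`, `kind`, `metadata`, `spec.containers`, `spec.volumes`) is the standard Pod schema. Both containers mount the same `emptyDir` volume, and because they share a network namespace the sidecar could also reach nginx on `localhost:80`.

```python
import json

# Hypothetical two-container pod: nginx writes logs, a sidecar reads them.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        # emptyDir lives as long as the pod and is shared by its containers.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-reader",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Saving the output as `pod.json` and running `kubectl apply -f pod.json` would create the pod on a cluster.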

Add-ons: Add-ons are optional components that extend the functionality of Kubernetes. Examples include the Kubernetes Dashboard, cluster DNS (such as CoreDNS), and monitoring tools.
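The DNS add-on gives every Service a name of the form `<service>.<namespace>.svc.<cluster domain>`, where the cluster domain defaults to `cluster.local`, and each pod's resolver is configured with a search list starting with its own namespace. The sketch below computes those names; the function names are hypothetical and the resolver's `ndots` handling is deliberately ignored.

```python
from typing import List


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that cluster DNS serves for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str, cluster_domain: str = "cluster.local") -> List[str]:
    """Names tried for a short lookup, following the pod's resolv.conf search list.

    Simplified: ignores the ndots option and the final attempt with the bare name.
    """
    search_list = [f"{pod_namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    return [f"{name}.{domain}" for domain in search_list]


print(service_fqdn("db", "shop"))                       # db.shop.svc.cluster.local
print(search_candidates("db", "shop"))
print(search_candidates("db.payments", "shop")[1])      # db.payments.svc.cluster.local
```

This is why a pod in namespace `shop` can reach the `db` Service simply as `db`, and a Service in another namespace as `db.payments`.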

Kubernetes Installation and Configuration

To install and configure Kubernetes, you can use one of the following methods:

Kubernetes the Hard Way: This method involves manually configuring each component of the Kubernetes architecture. It is a good option if you want to learn how Kubernetes works under the hood.

Minikube: Minikube is a tool that runs a local Kubernetes cluster (single-node by default) on your machine. It is an easy way to get started with Kubernetes without having to set up a full cluster.

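Kubernetes on a Cloud Provider: Most major cloud providers offer managed Kubernetes services, such as Amazon EKS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). The provider runs and maintains the control plane for you, so you can deploy and manage clusters without operating that part of the infrastructure yourself.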

Once you have installed Kubernetes, you can configure it to meet your specific needs. Some common configuration tasks include:

Creating namespaces to isolate applications.

Configuring networking to enable communication between pods and services.

Defining resource quotas to limit resource usage by namespaces or applications.
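As an illustration of the first and last tasks, the sketch below generates a Namespace together with a ResourceQuota for it, wrapped in a `v1` `List` so that one file can be applied with `kubectl apply -f`. The helper name, namespace name, and limits are hypothetical; the object fields (`spec.hard` with `pods`, `requests.cpu`, `requests.memory`) are standard ResourceQuota keys.

```python
import json
from typing import Any, Dict


def namespace_with_quota(name: str, cpu: str, memory: str, max_pods: int) -> Dict[str, Any]:
    """Return a v1 List holding a Namespace and a ResourceQuota scoped to it."""
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        "spec": {
            "hard": {
                "pods": str(max_pods),     # max number of pods in the namespace
                "requests.cpu": cpu,       # total CPU requests across all pods
                "requests.memory": memory, # total memory requests across all pods
            }
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


manifest = namespace_with_quota("team-a", cpu="4", memory="8Gi", max_pods=20)
print(json.dumps(manifest, indent=2))
```

Note that once a namespace has a quota on CPU or memory requests, pods created in it generally need to declare those requests (or get defaults from a LimitRange), otherwise the quota system may reject them.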